Research

In brief

I’m interested in applying insights from computational modeling and mathematical formalization to issues in theoretical linguistics (especially syntax), language acquisition and processing. I also explore the application of such methods to problems in Natural Language Processing. I pursue this work in the Computational Linguistics at Yale (CLAY) lab, whose website you can consult for more details.

Less briefly

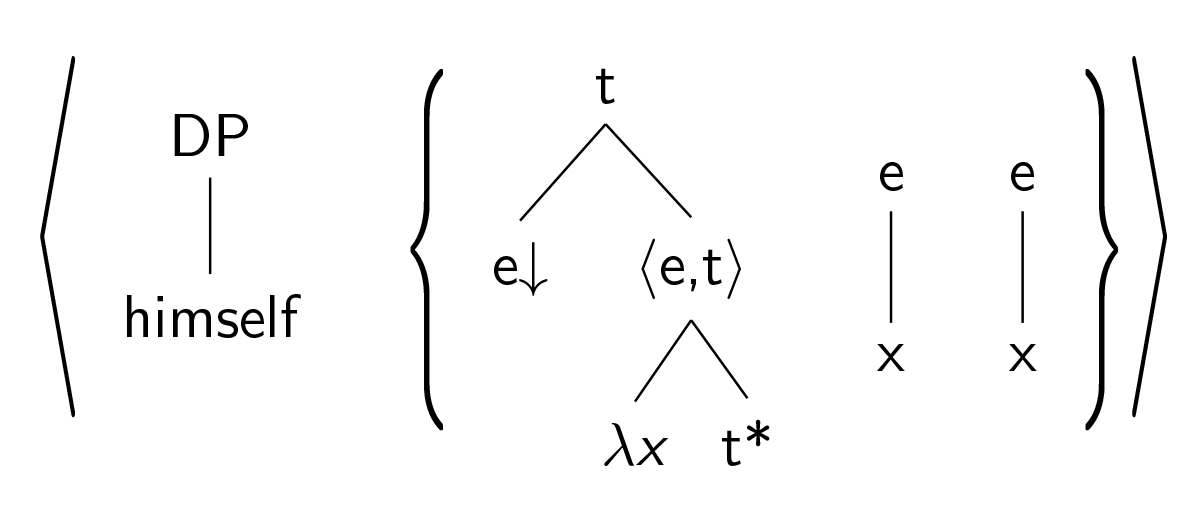

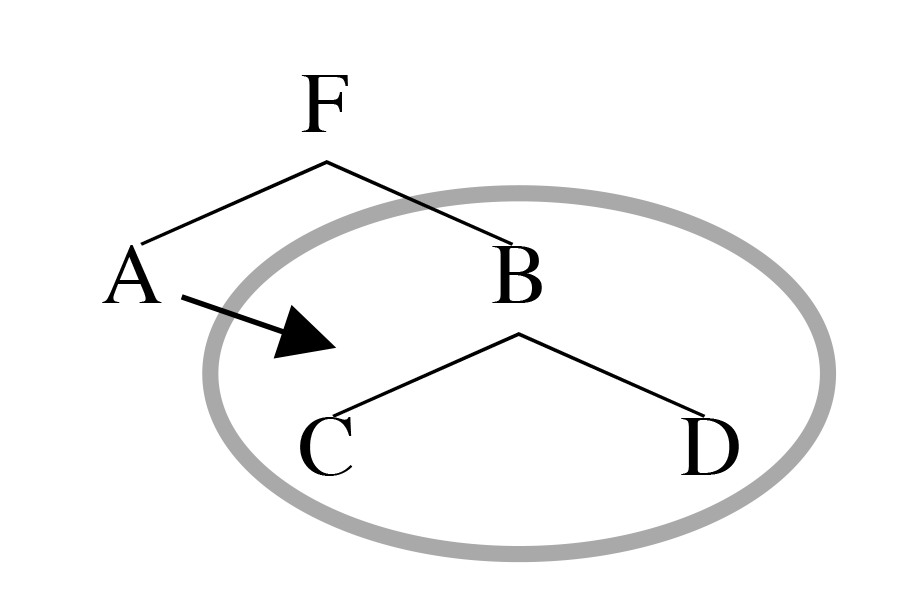

My work in theoretical linguistics focuses primarily on the relationship between constraints on natural language grammar and notions of mathematical and computational restrictiveness. Most of this work to date has addressed issues in syntax. For some time, I have been investigating the implications of incorporating the Tree Adjoining Grammar (TAG) formalism into syntactic theory. I have shown that the restrictiveness of the TAG formalism’s operations for building phrase structure and representing the syntax-semantics interface derives a number of non-trivial properties of human grammar, and yields elegant and explanatory analyses of a considerable range of phenomena. I am also interested in the relationship between TAG and related formalisms with similar generative capacity (e.g., Minimalist Grammars and CCG), and in the consequences of taking these systems as meta-languages for grammatical description.

In the realm of mathematical linguistics, I have studied alternative characterizations of syntactic structure, exploring the implications of taking phrase markers to be defined in terms of a primitive c-command relation. I have ventured into the domain of phonology as well, characterizing some of the mathematical properties of Optimality Theory.

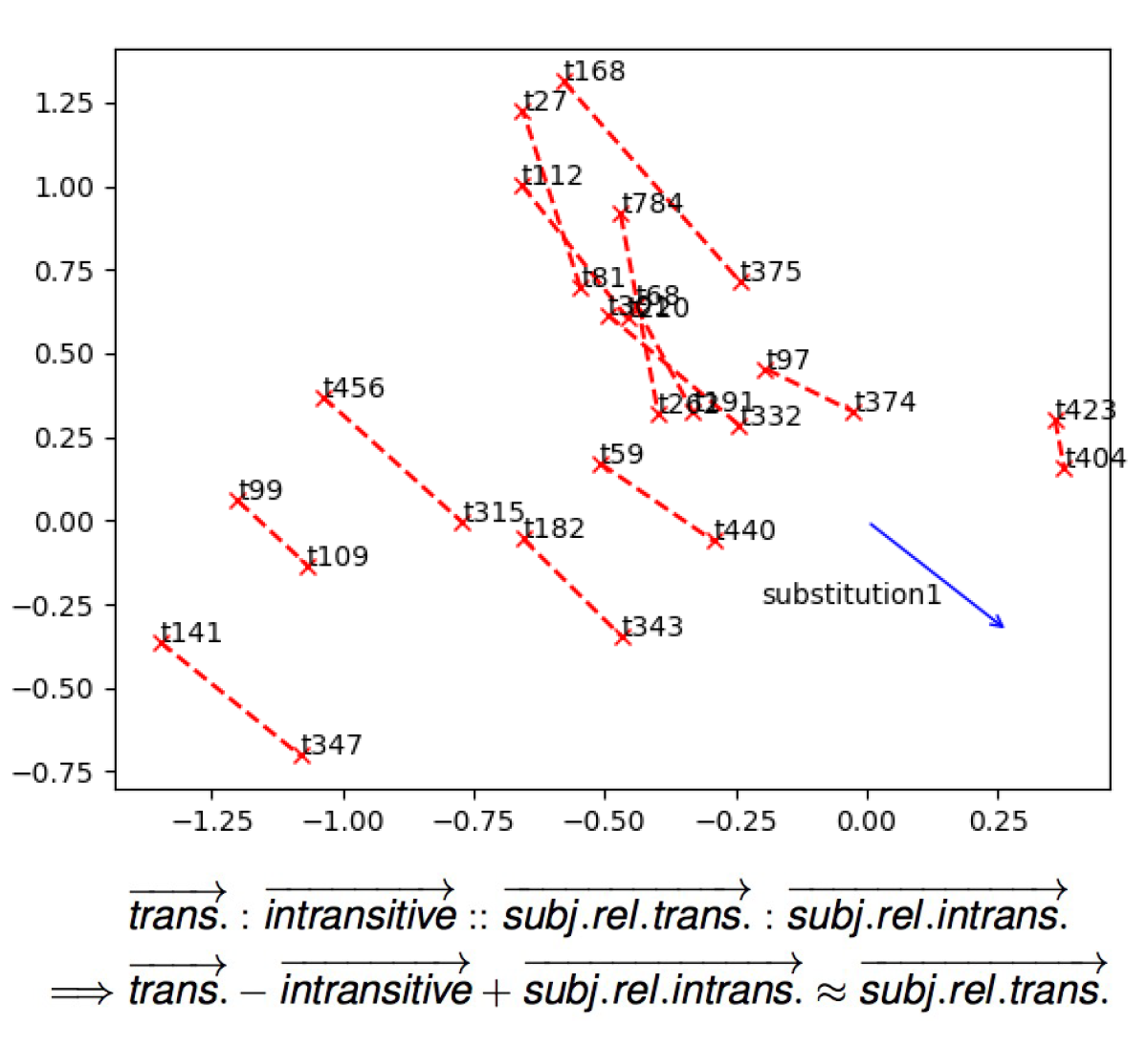

This theoretical work serves as a springboard for investigations of issues in language acquisition and processing. For some time, I have been exploring the capacity of neural network models to draw correct inductive inferences about detailed properties of grammar, in the domains of anaphora and displacement. I also study whether such models can exploit statistical regularities in the input to overcome classical arguments from the poverty of the stimulus.

In the area of Natural Language Processing, my group has been combining the methods discussed above, for example by applying neural network models to the problems of TAG supertagging, parsing, semantic role labeling and textual entailment. We are also exploring the properties of novel neural network architectures.